Written By

Roshan Desai, Joachim Rahmfeld

Published On

Feb 19, 2026

Benchmarking agentic RAG on workplace questions

TL;DR

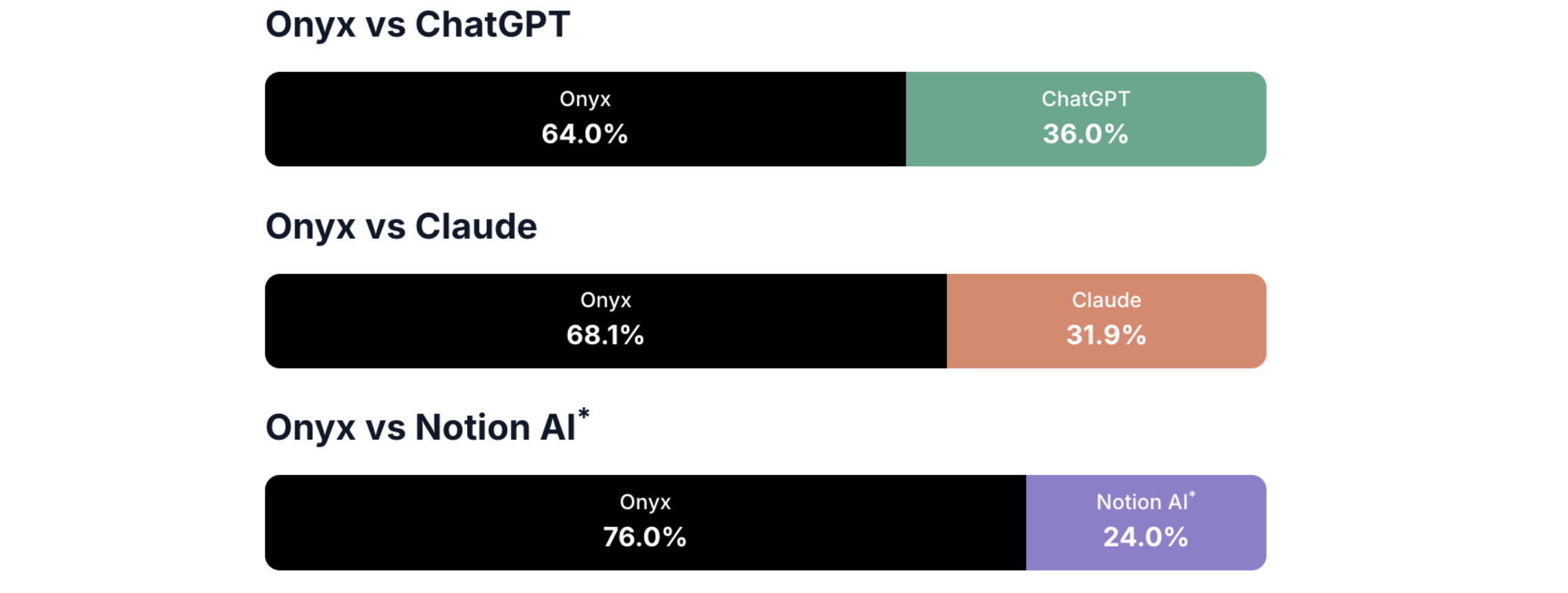

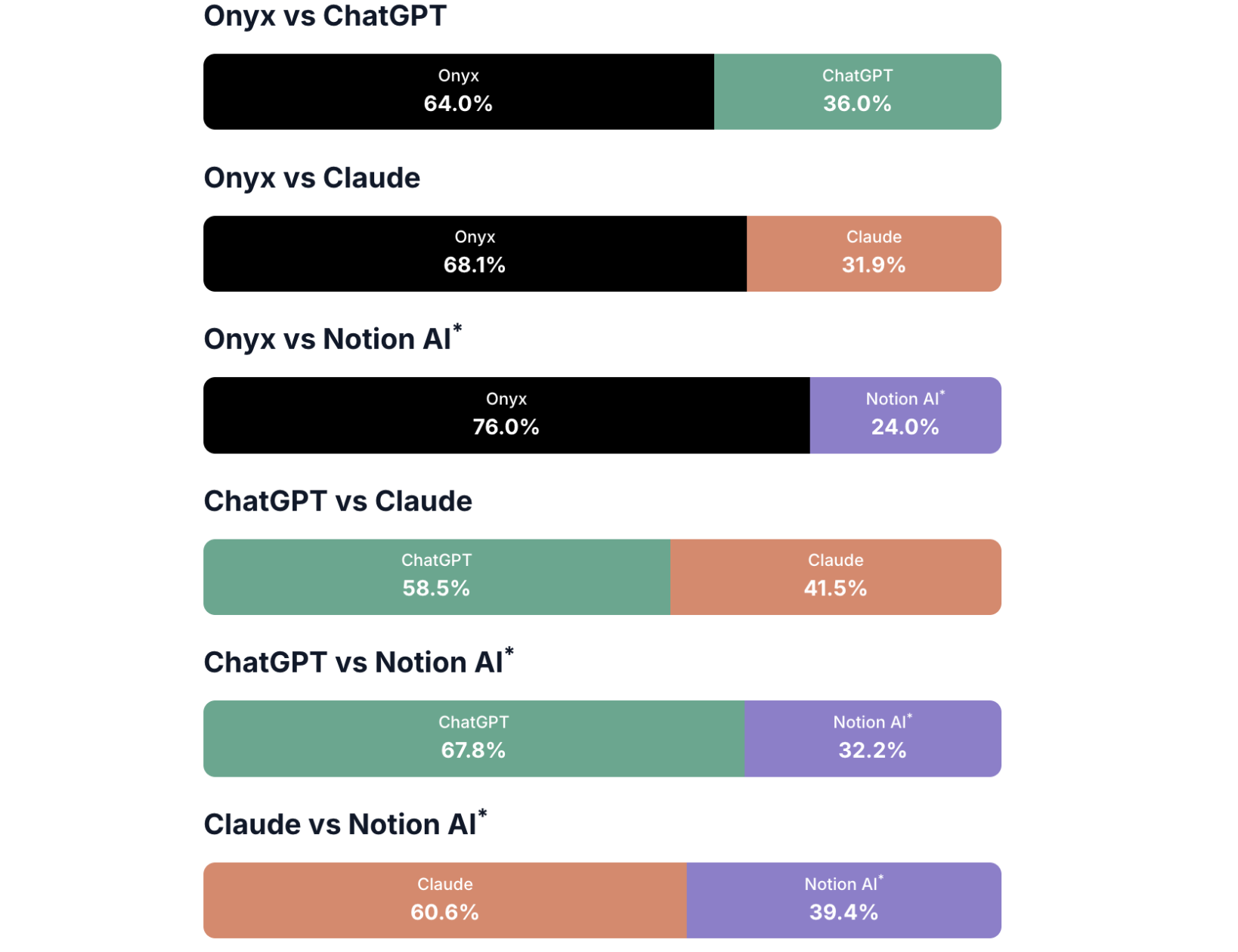

We compared Onyx against ChatGPT Enterprise, Claude Enterprise, and Notion AI on a set of real-world enterprise-style questions spanning our company’s internal and web data across various complexity types. Overall, Onyx outperformed the other vendors by a ~2:1 margin.

Onyx achieved averaged win rates of 64.0% : 36.0% vs ChatGPT Enterprise, 68.1% : 31.9% vs Claude Enterprise, and 73.9% : 26.1% vs Notion AI (on a reduced question set), derived from multiple distinct LLM-as-a-Judge methodologies.

Table 1: Overall Win Rates [ties are counted as ½ wins; N=99 except for comparisons with Notion AI (N=74)]

Onyx also showed the lowest time-to-last-token, with a 34.7s average response time vs 36.2s (Claude Enterprise), 45.4s (ChatGPT Enterprise), and 46.7s (Notion AI).

These results complement and line up well with Onyx’ recent excellent Deep Research benchmark results using DeepResearch Bench.

We share the benchmark questions (with some redactions), our LLM-as-a-judge prompts, and scores for all questions in our repo.

1. Introduction

Evaluating modern Agentic RAG systems is as interesting as it is important. And it is rather challenging.

Answer quality directly affects whether users trust and adopt the tool. A bad or incomplete answer is often worse than no answer at all. At the same time, a more thorough answer that takes minutes to compile - versus tens of seconds - may not be preferred either. Lastly, "quality" depends heavily on the types of questions asked, the documents available to the system, and who is asking.

RAG also has evolved substantially since the early days. Initially, systems largely retrieved relevant chunks from internal documents and had an LLM generate an answer from them. Today, agentic systems decompose answering into multiple steps: issuing sub-questions, using multiple tools, identifying gaps in retrieved information, asking follow-up questions behind the scenes, etc., and synthesizing a final response. At the extreme end, Deep Research approaches explore multiple substantial research threads in parallel and/or iteratively, reasoning over sources for 10+ minutes before producing a final report.

Unfortunately, no public benchmarks that we have found adequately represent what enterprise customers actually need as most of them are set up to only use web data. These results, while interesting, have limited relevance for enterprise teams deploying (or evaluating) an agentic search and chat tool over the relevant knowledge sources, which crucially include both internal data and web information.

At Onyx, we've revisited this problem multiple times over the past couple of years and have run multiple internal benchmarks. Some focused more narrowly on search quality using synthetic questions over a largely synthetic document corpus. Another test compared our Deep Research implementation against a competitor’s solution using questions generated by the Onyx team, evaluated via blind comparisons by team members. However, especially for the search benchmark tests, we never felt that the results were as realistic and customer-relevant as we’d like.

We therefore decided to create a new internal benchmark test that we believe is much more representative of actual customer needs: it is based on real data and informed by actual customer questions. It covers questions of varying complexity over a corpus of internal and web data. We share details, to the degree possible, here. We also provide summary metrics and a clear methodology below.

A note on fairness and limitations

To address the main question right on: yes, Onyx designed this benchmark, wrote the questions, selected the data sources, and defined the evaluation criteria. While we believe our approach provides the most realistic comparison we have available, we certainly recognize the potential for an inherent conflict of interest. To mitigate this to the extent possible:

- We employed four independent judging methodologies and two different judging models (GPT-5.2 and Opus 4.), and reported mean win rates from the combinations plus standard deviations for key metrics.

- The two judging LLMs evaluate answers without knowing which vendor produced them

- We publish the full question set (with some redactions), rubrics, and some answers so others can inspect some of our our work [link]

- We conducted human spot-checks across questions and judge outputs to verify alignment

- We are in the process of building a fully synthetic, open dataset so the community can independently test systems and evaluate results

In general, it would be desirable to also conduct human double-blind comparisons, ideally with external human judges. As we are using internal data, we opted in this case against this additional comparison.

We also acknowledge that our question count (N=99) introduces statistical uncertainty, and that our internal dataset is smaller than that of many of our customers.

With that context, let's get into the specifics.

2. Complexities of Agentic RAG Benchmarking

The problem of creating a suitable benchmark that reflects customer experiences is more complicated than it may first appear. We'll focus here on key design decisions.

What is a "suitable" set of questions?

Given the rapidly increasing abilities of AI-based systems, users often don't realize what's actually possible, habitually asking for far less than the technology can deliver.

A benchmark based purely on questions modeled after "current user questions" would therefore underestimate actual user intent over the near future. Instead, a good benchmark should approximate what users would realistically want to ask once they discover and get used to the full range of what's possible. We designed a mix of questions spanning a broad range of complexities: from simple fact lookups like "Who founded Onyx?" to complex multi-step analytical questions like "how to improve sales opportunity with customer xyz?". This led to 99 questions that, in our view, reflect what customers could realistically expect to be answered well. See repo for details.

What is a "good" answer?

We define answer quality as a combination of correctness, completeness, faithfulness, ability to handle uncertainty, and clarity. A solid assessment must consider all of these and attach realistic weights to them. Better yet, one should use something more nuanced than a simple weighted sum. We balanced these dimensions across multiple judging approaches; more details can be found in Section 3.

Another important aspect for agentic systems is the trajectory the system takes to arrive at an answer, which we call the ‘flow’. A strong flow is generally better aligned with the user's expectations (and hence easier to verify), tends to be more token efficient, and generally leads to better answers. As the flows are not always available however, we judge flow alignment through how the answers reflect it.

What are "good" tools and data sources?

Many RAG-type benchmarks focus only on web search. While convenient, open, and replicable, benchmarks based on web data alone do not reflect what matters most to enterprise users. For most RAG deployments, internal data is the primary target and it is significantly more complex. Organizations store information across multiple systems and document structures vary widely (a Standard Operating Procedure is very different from a call transcript, for example). For this benchmark, we used both Web and Internal Searches, where Internal Searches included only document types that all compared systems support for a given question.

What is a "good" internal dataset?

This is a key question. It is essential to cover data sources customers rely on to do their jobs — common examples include call transcripts, email, cloud drives, etc. Due to the lack of suitable public data that simulates this environment, we decided to use for now a subset of our own internal data, spanning about 220k documents. Consequently, we cannot release this dataset.

3. Benchmark Setup

Below we illustrate the high-level benchmark setup and provide more details.

The evaluated systems were:

- Onyx's most recent version using GPT-5.2 as a base model

- ChatGPT Enterprise (GPT-5.2)

- Claude Enterprise (Opus 4.5)

- Notion AI (Auto)

The tests were implemented through browser automation using Playwright.

What is not included in this benchmark is:

- Our more advanced Deep Research and Craft modes

- Memory and personalization — for any vendor's solution

While both of these are expected to improve answer quality, we deliberately excluded them to evaluate core agentic search performance.

3.1 Tools & Sources

We compared the vendors with both web search and access to a subset of our internal documents enabled via connectors.

The common connectors used for internal sources were:

- GitHub

- Gmail

- Google Drive

- Slack

- Linear

In addition, for all vendors except Notion, we also leveraged:

- Fireflies

- HubSpot

Call transcripts and sales data are essential to generating actionable, high-value insights.

3.2 Questions

We created a test set of 99 questions that we framed after real customer questions and questions we anticipate they will want to pose to the system.

Examples include:

- “I'm using version 2.11.0, how do I ingest documents programmatically?"

- “How do I upgrade [customer xyz]’s instance?”

- “How should I prioritize my first 10 enterprise companies for outreach?”

- “How do enterprise buyers evaluate AI knowledge search solutions?”

- “What common pains usually come up in discovery calls with prospects?”

- “Tell me what customers said about us last month”

- “How to improve sales opportunity with [customer xyz]?”

Overall, the question set has the following distribution across source categories:

| Category | Source Family Description | # Questions | Percentage |

|---|---|---|---|

| Internal Knowledge | Internal knowledge is required and sufficient to answer the question | 50 | 50.5% |

| Public Knowledge | Public knowledge - through web searches or the LLM as is - is needed and sufficient | 27 | 27.3% |

| Hybrid | Both, public and internal information are required to answer the question fully | 12 | 12.1% |

| Agnostic | Either public or internal sources are expected to independently have the information | 5 | 5.1% |

| Undefined | The question is not well enough defined for even the source type to be decided | 5 | 5.1% |

The `Undefined` category was included to see how well the system deals with substantially under-defined questions, like “What did we decide?”. Generally, users would not want the Assistant to report on some decision taken about something, but rather return something like “I do not have enough information to answer this question.”, or “Decision about what?”.

In addition, each question carries one or more characteristic tags:

| Tag | Tag Description | # Questions | Percentage |

|---|---|---|---|

| Answer-Focus | The user does not expect just a fact to be found and restated, but a fully formed answer using identified facts to be given. | 72 | 72.7% |

| Reasoning-Heavy | To provide a satisfactory answer the system needs to perform judging, categorizing, and/or other non-trivial reasoning tasks. | 30 | 30.3% |

| Ambiguity | The question is somewhat loosely defined or ambiguous. | 24 | 24.2% |

| Fact-Lookup | The user request is essentially a search for an individual fact that then is expected to be stated directly. | 18 | 18.2% |

| Abstract-Entity-Understanding | The question is asking about more abstract entities (example: "use cases") that are not fully defined, often also requiring the system to model the categories. | 15 | 15.2% |

| SQL-Type | The question is "like a verbalized SQL statement", Or has major components of that type | 7 | 7.1% |

| Single-Doc-Request | The question specifically targets a single existing document. | 6 | 6.1% |

| Multi-Hop | The system will need to perform iterative tool calls/searches where future searches are informed by the results of earlier ones. | 5 | 5.1% |

3.3 Judgments

Judging answer quality is inherently difficult and subjective beyond a certain point. Our approach is to employ four independent LLM-as-a-Judges to verify robustness. Across all of them:

- Vendor names are not provided to the judges and the order was randomized

- Citations were removed as different vendors handle citations differently.

- Where applicable, scores are on a 1–10 scale (normalized for Judge 2; see below).

- All judges were evaluated with two models, GPT-5.2 and Claude Opus 4.5

- For Judges 1 - 3, final win rates were computed by first averaging each vendor's scores across all three judges and both judging models for a given question, then comparing the averaged scores pairwise.

Here are the details for the four judges:

Judge 1: Gold Facts & Behaviors, Graded on Scale

Rather than providing full "gold answers”, we defined for each question where meaningful:

- A Gold Flow — how we expect the agentic RAG system to approach the question

- Gold Facts — directional facts we would expect a good answer to include

Each vendor's answer was graded independently against these expectations.

Judge 2: Gold Facts & Behaviors, Pass/Fail

This approach also judged answers against the gold information, but used a binary pass/fail criterion applied independently to each rubric item. Scores were then normalized to the 1–10 range.

Judge 3: Relative Judging

Rather than comparing against a gold standard, this judge viewed all vendor responses at once (anonymized and randomly ordered) and scored each based on its quality relative to the others. The underlying assumption: facts shared across multiple responses are likely accurate, so the collective serves as a proxy for ground truth.

Judge 4: Pairwise Preference Judging [excluding Notion AI]

Similar to Judges 1 and 2, gold flows and facts were provided as well as general target metrics. The judging LLMs were asked to rank the Onyx answer vs the competitor’s answer.

These approaches certainly do not fully guard against hallucinations or missing critical information, but we believe, supported by our human spot-checks, that collectively they produce sufficiently robust results that are both reasonably grounded and not overly dependent on any single judge's specifics.

Our overall metric categories with corresponding weights applied across all judges were:

- Correctness: 40%

- Completeness: 30%

- Uncertainty Handling: 20%

- Faithfulness to Context: 5%

- Clarity and Format: 5%

Note that we are not explicitly considering retrieval quality as an independent metric in the benchmark. We view retrieval as a backend process that will inform the answer, and it will be implicitly scored through the existing metrics.

The detailed prompts and metrics for the judges can be found in the repo.

3.4 The Dataset

We are using a subset of our internal data for this benchmark test. It includes about 220k documents across all sources: 2750 Fireflies call transcripts, 8k+ Github PRs and Issues, about 190k emails, 7k+ Google Drive documents, 2k+ Linear tickets, 10k+ Slack messages, and 12k+ HubSpot companies, deals, and contacts). We cannot make this critical component of the tests public.

4. Results

Overall, we found that Onyx outperformed our competitors approximately by a 2:1 margin.

Table 4: Overall Win Rates (N=99 for Onyx, ChatGPT, Claude, and *N=74 and Judges 1-3 for Notion AI comparisons)

Note that ties are not uncommon; we accounted for them by giving each of the compared systems ½ of a win. We should state that the four judges were reasonably consistent. Their standard deviation for the Onyx win rate was 7.0%/3.4% for the ChatGPT/Claude comparisons respectively. Details can be found here.

It is also worth examining results across categories and tags:

| Onyx versus ChatGPT | Onyx vs Claude | GPT vs Claude | N | |

|---|---|---|---|---|

| Overall | 64.0% : 36.0% | 68.1% : 31.9% | 58.5% : 41.5% | 99 |

| Category | Onyx versus ChatGPT | Onyx vs Claude | GPT vs Claude | N |

|---|---|---|---|---|

| Internal Knowledge | 65.5% : 34.5% | 69.4% : 30.6% | 60.1% : 39.9% | 50 |

| Public Knowledge | 72.6% : 27.4% | 74.0% : 26.0% | 55.3% : 44.7% | 26 |

| Hybrid | 55.7% : 44.3% | 64.1% : 35.9% | 66.1% : 33.9% | 12 |

| Agnostic | 63.8% : 36.2% | 72.5% : 27.5% | 63.8% : 36.2% | 5* |

| Undefined | 28.8% : 71.2% | 32.5% : 67.5% | 57.5% : 42.5% | 5* |

| Tag | Onyx versus ChatGPT | Onyx vs Claude | GPT vs Claude | N |

|---|---|---|---|---|

| Answer Focus | 63.0% : 37.0% | 68.8% : 31.2% | 59.2% : 40.8% | 71 |

| Reasoning-Heavy | 67.7% : 32.3% | 72.1% : 27.9% | 59.6% : 40.4% | 30 |

| Ambiguous | 60.1% : 39.9% | 66.0% : 34.0% | 60.3% : 39.7% | 23 |

| Fact Lookup | 78.5% : 21.5% | 75.7% : 24.3% | 51.7% : 48.3% | 18 |

| Abstract Entities | 70.0% : 30.0% | 74.6% : 25.4% | 59.6% : 40.4% | 15 |

Latency (Time to Last Token, All Questions Available for Given System)

Lastly, largely just to demonstrate that all systems used a similar approach and not a Deep Research or another notably different framework, we also report on the average Time-to-Last-Token times:

| Platform | Avg Response Time (s) |

|---|---|

| Onyx | 34.7 |

| Claude | 36.2 |

| ChatGPT | 45.4 |

| Notion | 46.7 |

Onyx delivered the fastest average response time while also producing more comprehensive answers as reflected in the win rates above.

Discussion and Observations

Overall, these results show Onyx shows strong performance across all but one category under our evaluation approach. The exception is the "Undefined" category (very loosely defined or under-specified queries), where our agentic flow tends to attempt an answer rather than decline to answer. Whether this is preferable depends on user expectations.

We believe that the overall superior results are largely driven by two architectural choices:

1. Direct data indexing

Onyx indexes all connected data directly, enabling us to apply our hybrid search approach consistently across diverse data sources. Some competitors rely on MCP (Model Context Protocol) connections for at least some of their data connectors. While we have not isolated this variable in a controlled test, we believe direct indexing contributes meaningfully to retrieval consistency — particularly across heterogeneous document types. Whereas the win rates on first sight do not directly support this assertion, we find the average weighted score (across all judges) per category most telling in this case:

| Category | Onyx vs ChatGPT | Onyx vs Claude |

|---|---|---|

| Internal Knowledge | +16% | +30% |

| Public Knowledge | +10% | +13% |

| Overall | +9% | +19% |

We also see very good evidence for this in the two examples below where we compare the answers of Onyx, ChatGPT, and Claude. Particularly the second one clearly demonstrates that the systems using MCP connections were not able to retrieve highly relevant internal documentation.

2. Agentic orchestration

Our system's approach to query decomposition, tool selection, and iterative refinement appears well-suited to the kinds of complex, multi-source questions that enterprises care about. We also separate searching from reading for both, external and internal sources. That gives the system the ability to read the full document if needed to get the full context. Consider for example call transcripts: if only chunks are retrieved, a lot of critical context can get lost.

Some Examples

Below we share a few answer examples that we find instructive:

Question 1: "What are our guarantees on patching discovered vulnerabilities?"

Onyx

Our vulnerability patching/remediation guarantee is based on the assessed severity of the finding:

| Severity | Remediation / Patch Timeline |

|---|---|

| Critical | ≤ 30 days |

| High | ≤ 30 days |

| Medium | ≤ 60 days |

| Low | ≤ 90 days |

| Informational | As needed |

Additional details on how we operationalize this:

We evaluate severity for vulnerabilities identified from any source (scanning, vendor alerts, pen tests, bug bounty, etc.) and create service tickets for remediation when they’re risk-relevant (especially critical/high).

If something cannot be remediated within the standard timeline, the ticket must include a risk treatment plan and a planned remediation timeline.

ChatGPT

We commit to patching or otherwise remediating discovered vulnerabilities according to their severity. Specifically, critical and high-risk vulnerabilities are addressed within 30 days, medium-risk within 60 days, and low-risk within 90 days. Informational findings are handled on a case-by-case basis. If a vulnerability cannot be remediated within these timelines, we are required to document a risk treatment plan along with a planned remediation date. These commitments are documented in our operations security policy and referenced in our internal SLA documentation",

Claude

I'll search your Google Drive for information about vulnerability patching guarantees and policies.

Let me search with different terms to find your security or SLA documentation.

I found a System Description document that likely contains security policies. Let me fetch the full document.

I found relevant information in your Onyx System Description document. Let me also search for any specific SLA documentation that might have more detailed timelines.

Based on your Onyx System Description document, here's what I found regarding vulnerability patching guarantees:

Onyx utilizes vulnerability scanning software that checks source code for common security issues as well as for vulnerabilities identified in open source dependencies and maintains an internal SLA for responding to those issues. Additionally, Onyx engages an external security firm to perform quarterly vulnerability scans and annual penetration testing to look for unidentified vulnerabilities, and the product engineering team responds to any issues identified via the regular incident response and change management process.

However, the document mentions an "internal SLA" without specifying the exact timelines for different severity levels (e.g., critical vulnerabilities patched within X hours, high within Y days, etc.). Do you have a separate security policy document or customer-facing SLA that contains those specific remediation timeframes? If you're preparing this information for a customer inquiry or compliance questionnaire, you may want to check with your team about what specific commitments you're making.

Question 2: "does [COMPANY] have a preference for a more search-like interface?"

Onyx

Based on the internal records, [COMPANY] does lean toward a search-like interface, but the picture is nuanced:

🔍 Evidence for a Search Preference

In a meeting with the Onyx founders, [PERSON] (a software engineer at [COMPANY]) described their primary need as unified searching across internal data sources — Slack, Confluence, GitHub, Google Drive, etc. A key use case is on-call engineers searching for prior incidents (e.g., “where have we seen this before?”), which is inherently a search-oriented workflow.

They are currently using [OTHER VENDOR] primarily for its search functionality to support on-call engineers, and notably mentioned that they tried [OTHER VENDOR]’s assistant/chat features ~1.5–2 years ago and “didn't find it very useful” for their on-call use case.

There is also a mention that power users prefer a more traditional search experience where they can see options and references directly.

💬 But It's Not Black and White

Internal analysis suggests that while users initially gravitate toward the search interface for straightforward queries, there is also interest in the chat interface for more complex interactions — such as setting LLM parameters or auto-answering questions in Slack.

Feedback sessions were planned to provide more conclusive data, but at the time of the notes, it had only been the first week of use, so a definitive preference between search vs. chat was not fully established.

Key Takeaways

| Aspect | Detail |

|---|---|

| Primary use case | On-call issue triage — finding similar past threads/incidents |

| Current tool | [OTHER VENDOR] (search functionality only) |

| Chat experience | Tried [OTHER VENDOR]’s assistant ~2 years ago, found it not very useful |

| Initial user behavior | Users gravitate toward search for straightforward queries |

| Reason for exploring Onyx | Price (main driver) + data privacy/self-hosting concerns |

Bottom line: [COMPANY]’s core use case strongly favors a search-like interface — quick, multi-source retrieval for specific incidents and keywords. They haven't ruled out chat-style interactions, but their track record and stated needs suggest search is the higher priority.

ChatGPT

Based on prior internal discussion, there is no definitive preference yet from [COMPANY] for a purely “search-like” interface over a richer chat interface — but there are some signals worth noting.

What we’ve discussed internally

In the product Slack thread on this exact question, the current understanding was:

Users initially gravitate toward search for straightforward queries (quick, direct retrieval).

There’s a need to encourage exploration of chat, suggesting search may be the default behavior but not necessarily the preferred long-term modality.

Some users are using chat as a ChatGPT-style replacement, especially for more complex interactions (e.g., parameterized LLM usage, Slack automation).

Feedback was still early-stage (“first week of use”), and more structured feedback sessions were expected.

In short:

Early behavior leans search-first, but there’s demonstrated value and interest in richer chat workflows.

Interpretation

So far, it looks like:

Search-like UI works well for simple, known-item retrieval.

Chat-style UI becomes more valuable for complex, multi-step, or exploratory workflows.

No strong evidence that they explicitly prefer only search.

The question likely isn’t binary — it may be about progressive disclosure: search-first with optional conversational deepening.

If this is for roadmap thinking

The signal suggests:

A lightweight, fast search entry point is important.

But higher-value stickiness likely comes from richer workflows layered on top (follow-ups, summaries, orchestration, etc.).

If helpful, I can also:

Check whether there’s more recent customer feedback beyond this early thread.

Look into deal notes to see if any preference was explicitly mentioned in later calls.

Help frame this as a product positioning hypothesis.

Claude

Let me search for information about [COMPANY]’s product preferences regarding search-like interfaces. My web search returned results about [COMPANY & WHAT THEY DO], but nothing specifically about them preferring a “search-like interface.” This question could be referring to something internal at your organization or a specific context I’m not finding publicly.

Could you give me a bit more context? For example:

Are you referring to [COMPANY & WHAT THEY DO], or something else?

Is this about a specific product, tool, or internal discussion — like a preference for how their platform’s UI should work (search-based vs. browse-based)?

Did this come up in a meeting or internal document?

If it’s an internal discussion or document, I can search your Google Drive, Slack, or Fireflies meeting transcripts to find relevant context.

For the first question we believe that Onyx’ answer is the best as it very clearly finds and articulates the proper information, providing a holistic answer to the user’s question. ChatGPT’s answer is not wrong, but not as pointed and precise.

For the second question, Onyx’ answer is certainly much more specific and informative than ChatGPT’s. Onyx clearly found call information, apparently missed by both ChatGPT and Claude, which is clearly highly relevant to inform the user. In fact, Claude’s answer suggests the system did not even attempt any internal searches to construct the answer. Maybe a follow-up interaction with the user would have provided more relevant information, but the first interaction certainly fell short.

Finally, a couple of notable observations regarding the gap between ChatGPT Enterprise and Claude Enterprise - as Assistants and as Judges:

- We traced some of the answer quality/win rate differences between Claude and ChatGPT (vs. Onyx and vs. each other) to Claude frequently asking for clarifications rather than leveraging available context to attempt an answer directly. This is admittedly a somewhat subjective distinction. Some users may prefer clarification over assumptions. In our assessment however, it occurred more often than most users would likely find useful. This is reflected in the scores. We attempted to compensate by appending an explicit instruction to each question for Claude discouraging this behavior, but results did not improve under our evaluation criteria, and response times increased by approximately 10 seconds. Therefore, we report the results here without this modification.

- It is also interesting to note that the two models GPT-5.2 and Claude Opus 4.5 behaved rather differently when used as the models powering the judges, with each one being bias towards answers generated "by themselves".

We are not commenting on which model we consider to be the better judging model as these results inherently also depend on the prompts and other choices, but we consider the difference notable and interesting in its own right.

| Judging Model | Onyx Avg Win Rate vs ChatGPT | Onyx Avg Win Rate vs Claude |

|---|---|---|

| GPT-5.2 | 59.2% | 68.6% |

| Claude Opus 4.6 | 68.7% | 67.4% |

Overall, these results line up well with the success of our recent Deep Research benchmark performance using DeepResearch Bench, where we had achieved #1 ranking.

They are also supported by our customers, as evidenced by this quote:

“Onyx is answering thousands of questions a week at Ramp. We tried a variety of other AI tools but none had the same answer reliability as Onyx. It’s been a huge productivity boost as we continue to scale.” (Tony Rios, Director of Product Ops, Ramp)

5. Conclusions & Outlook

Our key finding is that Onyx outperformed the other evaluated vendors by approximately 2:1 in terms of pairwise win rates, with some variation across question categories.

The results of this benchmark helped us evaluate our solution relative to other vendors, identify where we excel and where we can rethink our approach, and better understand how answer quality varies across question types.

Areas we would like to improve include:

- More questions. N=99 provides directional signals but limits statistical power, particularly for smaller category slices. We aim to expand the set substantially in future tests.

- Broader data. Our internal dataset is smaller than that of many current and prospective customers. Patterns in larger, more diverse knowledge bases may differ.

- Independent verification. While we publish questions, rubrics, and some other information, the underlying internal documents and many of the answers cannot be shared. We recognize the limitations of this approach.

To address these gaps, we are planning on building a synthetic dataset that models the types of documents a larger enterprise would have.